Why would you ask a bot to generate a stereotypical image and then be surprised it generates a stereotypical image? If you give it a simplistic prompt, it will come up with a simplistic response.

So the LLM answers what’s relevant according to stereotypes instead of what’s relevant… in reality?

It just means there’s a bias in the data that is probably being amplified during training.

It answers what’s relevant according to its training.

Please remember what the A in AI stands for.

Kinda makes sense though. I’d expect images that are actually labelled as “an Indian person” to overrepresent people wearing this kind of clothing. An image of an Indian person doing something mundane in more generic clothing is probably more often than not going to be labelled as “a person doing X” rather than “an Indian person doing X”. Not sure why these authors are so surprised by this.

Yeah I bet if you searched desi it wouldn’t have this problem

Like most of my work’s processes… Shit goes in, shit comes out…

That’s Sikh

Indians can be Sikh, not all Indians are Hindu

Yes, but the gentlemen in the images are also Sikhs

glances at the current policies of the Indian government

Well… for now, anyway.

Sikh pun

Not necessarily. Hindus and Muslims in India also wear them.

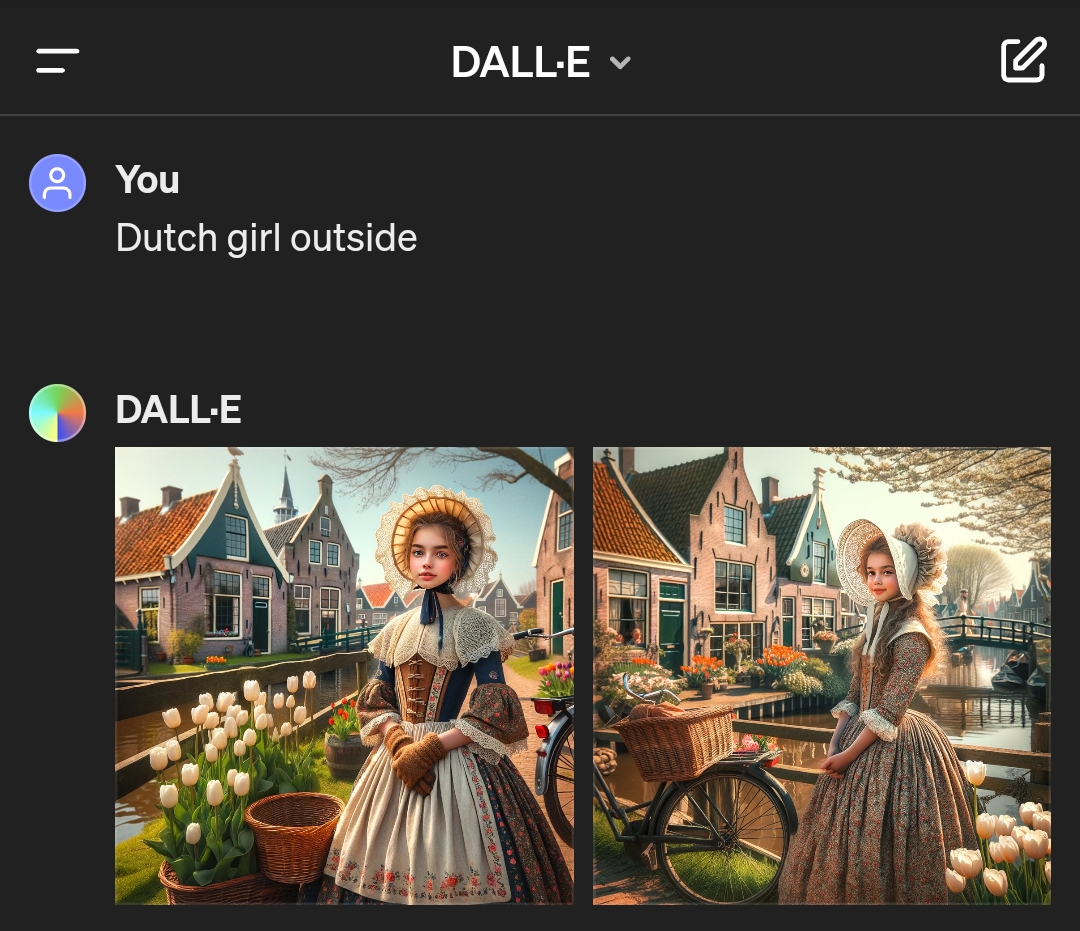

Meanwhile on DALL-E…

I’m just surprised there’s no windmill in either of them. Canals, bikes, tulips… Check check check.

Careful, the next generated image is gonna contain a windmill with clogs for blades

Well, they do run on air…

That looks just like the town Delft

Get down with the Sikhness

not me calling in sikh to work

Articles like this kill me because they nudge that it’s kinda sorta racist to draw images like the ones they show, which look exactly like the cover of half the Bollywood movies ever made.

Yes, if you want to get a certain type of person in your image you need to choose descriptive words. Imagine going to an artist and saying ‘I need a picture and almost nothing matters besides the fact they look Indian’. Unless they’re bad at their job they’ll give you a Bollywood movie cover with a guy from Rajasthan in a turban - just like their official tourist website does.

Ask for a businessman in Delhi or an Urdu shopkeeper with an Elvis quiff if that’s what you want.

the ones they show, which look exactly like the cover of half the Bollywood movies ever made.

Almost certainly how they’re building up the data. But that’s more a consequence of tagging. Same reason you’ll get Marvel’s Iron Man when you ask an AI generator for “Draw me an iron man”. Not as though there’s a shortage of metallic-looking people in commercial media, but by keyword (and thanks to aggressive trademark enforcement) those terms are going to pull back a superabundance of a single common image.

Imagine going to an artist and saying ‘I need a picture and almost nothing matters besides the fact they look Indian’

I mean, the first thing that pops into my head is Mahatma Gandhi, and he wasn’t typically in a turban. But he’s going to be tagged as “Gandhi”, not “Indian”. You’re also very unlikely to get a young Gandhi, as there are far more pictures of him later in life.

Ask for a businessman in Delhi or an Urdu shopkeeper with an Elvis quiff if that’s what you want.

I remember when Google got into a whole bunch of trouble by deliberately engineering their prompts to be race blind. And, consequently, you could ask for “Picture of the Founding Fathers” or “Picture of Vikings” and get a variety of skin tones back.

So I don’t think this is foolproof either. It’s more just how the engine generating the image is tuned. You could very easily get a bunch of English bankers when querying for “Businessman in Delhi”, depending on where and how the backlog of images is sourced. And “Urdu shopkeeper” will inevitably give you a bunch of convenience stores and open-air stalls in the background of every shot.

There are a lot of men in India who wear a turban, but the ratio is not nearly as high as Meta AI’s tool would suggest. In India’s capital, Delhi, you would see one in 15 men wearing a turban at most.

Probably because most Sikhs are from the Punjab region?

Overfitting

It happens

This isn’t what I call news.

But is it what you call technology?

I call everything technology

It’s the “skin cancer is where there’s a ruler” phenomenon.

I don’t get it.

I’m guessing this relates to training data. Most training data that contains skin cancer is probably coming from medical sources and would have a ruler measuring the size of the melanoma, etc. So if you ask it to generate an image it’s almost always going to contain a ruler. Depending on the training data I could see generating the opposite as well, ask for a ruler and it includes skin cancer.
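To make that concrete, here’s a rough sketch with a made-up toy dataset (the labels, features, and counts are all invented for illustration, not real statistics). It just measures how often a ruler shows up alongside each label, which is the kind of co-occurrence a model could end up latching onto:

```python
# Hypothetical toy dataset: (label, set of things visible in the image).
# All counts here are invented for illustration only.
dataset = (
    [("skin cancer", {"lesion", "ruler"})] * 9
    + [("skin cancer", {"lesion"})] * 1
    + [("healthy skin", {"skin"})] * 9
    + [("healthy skin", {"skin", "ruler"})] * 1
)


def feature_rate(label, feature):
    """Fraction of images carrying `label` that also show `feature`."""
    matching = [feats for lab, feats in dataset if lab == label]
    return sum(feature in feats for feats in matching) / len(matching)


ruler_given_cancer = feature_rate("skin cancer", "ruler")
ruler_given_healthy = feature_rate("healthy skin", "ruler")

print(f"ruler | skin cancer:  {ruler_given_cancer:.0%}")
print(f"ruler | healthy skin: {ruler_given_healthy:.0%}")
```

With a skew like that, “ruler” predicts the label almost as well as the lesion does, so a model trained on it has little reason to tell the two apart.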

Ooooohhhh nice explanation!

Would they be equally surprised to see a majority of subjects in baggy jeans with chain wallets if they prompted it to generate an image of a teen in the early 2000s? 🤨

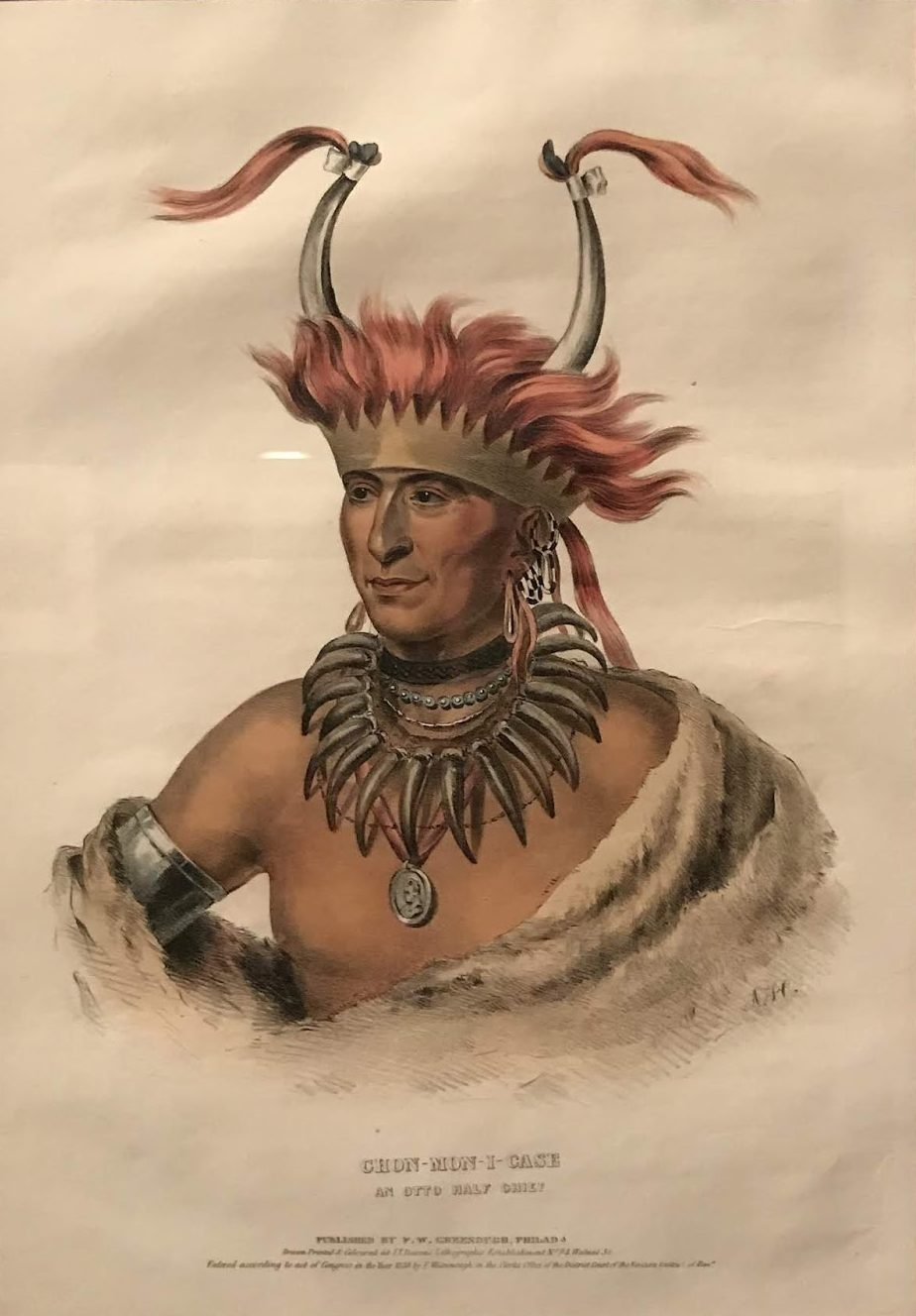

I’m not sure how AI could possibly be racist. (Image is of a supposed Native American but my point still stands)

What point is that?

Stereotypes are everywhere

the AI itself can’t be racist but it will propagate biases contained in its training data

It definitely can be racist, it just can’t be held responsible as it just regurgitates.

No, racism requires intent, and AI isn’t (yet) capable of intent.

It definitely can be racist

that requires reasoning, no AI that currently exists can do that

Makes sense

Turbans are cool and distinct.

Whenever I try, I get Ravi Bhatia screaming “How can she slap?!”